The problems

There is always a problem. In my case, I had two problems.

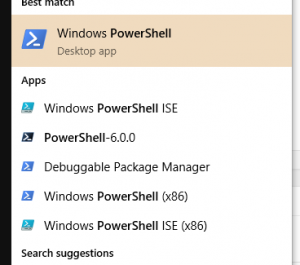

First, when I teach PowerShell, I mention that it’s a nice language for writing DSLs (domain-specific languages). If you want an in-depth look, Kevin Marquette has a great series on writing DSLs. I highly recommend reading it (and everything else he’s written). I’m going to cover some of the same material he did, but differently.

Anyway, back to the story. When I mention DSLs, I generally get a lot of blank stares. Then I try to explain them, but I don’t have a great example (Pester, Psake, and DSC are a bit advanced). So I was looking for a DSL I could write that would be easy to explain, with straightforward code and output. That’s the first problem.

The second problem, again from teaching, comes up when I talk about writing GUIs. This is always a popular topic, and it’s a lot of fun to discuss the different options. I get asked when it is a good idea to write a UI in PowerShell and when it would make more sense to do it in a managed language. My answer is something along the lines of “If it’s something simple like a data-entry form, then PowerShell is a great fit. If it has much complexity, you are probably going to want to use C#.” After teaching last November, I got to thinking that writing a data-entry form in PowerShell really isn’t that easy.

PowerShell to the rescue!

I decided that I needed to remedy the situation. Writing a data-entry form (where we’re not super concerned about the look-and-feel) should be trivial.

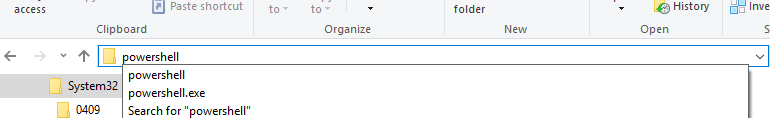

My first thought was that I should be able to write something like this:

    Window {
        TextBox FirstName
        TextBox LastName
        TextBox EmailAddress
    }

And have PowerShell present you with a reasonable-looking UI. After pressing OK (OK/Cancel would be added automatically), it would output an object with properties named FirstName, LastName, and EmailAddress.
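To make the target concrete, here is a rough sketch of the kind of object the DSL is meant to emit. The values are made-up placeholders, not output from the real form; only the property names come from the example above.

```powershell
# Placeholder values for illustration only; real values would come from the form.
$result = [PSCustomObject][ordered]@{
    FirstName    = 'Ada'
    LastName     = 'Lovelace'
    EmailAddress = 'ada@example.com'
}

# Downstream code can then use ordinary property access.
"$($result.FirstName) $($result.LastName) <$($result.EmailAddress)>"
```

Because the result is a plain object, it can be piped to things like Export-Csv or Format-List without any knowledge of the UI that produced it.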

The first swag at the code looked something like this (fwiw, this is a reconstruction, not the actual code).

First, the TextBox function:

    function TextBox {
        [CmdletBinding()]
        Param($Name)
        # The control's Name doubles as the label text and the output property name.
        $Properties = @{ Name = $Name; MinWidth = 100 }
        New-Object System.Windows.Controls.TextBox -Property $Properties
    }

That’s pretty simple. It takes the name as a parameter and outputs a textbox with that name.

The Window function is a bit trickier. The controls (textboxes and labels) are going to go in a 2-column grid (so we don’t have to fight hard with the layout).

Also, for now I’m going to leave off the OK/Cancel buttons (so I also have to pretend you pressed OK), but this is a great start.

Here’s attempt 1 at the code:

    Add-Type -AssemblyName PresentationFramework

    function Window {
        [CmdletBinding()]
        Param($Contents)

        $window = New-Object System.Windows.Window -Property @{ SizeToContent = 'WidthAndHeight' }
        $grid = New-Object System.Windows.Controls.Grid -Property @{ Margin = 10 }
        $window.Content = $grid

        # Two columns: labels on the left, controls on the right.
        $grid.ColumnDefinitions.Add((New-Object System.Windows.Controls.ColumnDefinition -Property @{ Width = 'Auto' }))
        $grid.ColumnDefinitions.Add((New-Object System.Windows.Controls.ColumnDefinition -Property @{ Width = '*' }))

        # Run the script block; each TextBox call inside it emits one control.
        $controls = & $Contents

        $row = 0
        foreach ($item in $controls) {
            $grid.RowDefinitions.Add((New-Object System.Windows.Controls.RowDefinition))

            # Label in column 0, named after the control.
            $label = New-Object System.Windows.Controls.Label -Property @{ Content = $item.Name }
            [System.Windows.Controls.Grid]::SetRow($label, $row)
            [System.Windows.Controls.Grid]::SetColumn($label, 0)
            $grid.Children.Add($label) | Out-Null

            # The control itself in column 1 of the same row.
            [System.Windows.Controls.Grid]::SetRow($item, $row)
            [System.Windows.Controls.Grid]::SetColumn($item, 1)
            $grid.Children.Add($item) | Out-Null

            $row++
        }

        # "-or $true" stands in for the missing OK button.
        if ($window.ShowDialog() -or $true) {
            $output = [Ordered]@{}
            foreach ($item in $controls) {
                $output[$item.Name] = $item.Text
            }
            [PSCustomObject]$output
        }
    }

Most of the code is pretty straightforward. I execute the $Contents parameter to get the controls. Then I loop through those, creating a label in the first column and putting the control in the second column.

After calling the ShowDialog() method on the window (and using -or $true to fake out the missing OK button), I loop through the controls again and output an object whose property names and values are harvested from the Name and Text of each control.
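The core trick (run a script block, collect whatever it emits, then harvest names and values) doesn't depend on WPF at all. Here is a minimal sketch of just that pattern, with no UI involved. The `Field` and `Form` functions are hypothetical stand-ins I made up for illustration; they are not part of the code above.

```powershell
# Hypothetical stand-in for a control: just a name and a text value.
function Field {
    [CmdletBinding()]
    Param($Name, $Text = '')
    [PSCustomObject]@{ Name = $Name; Text = $Text }
}

# Hypothetical container: runs the script block and harvests what it emits.
function Form {
    [CmdletBinding()]
    Param([scriptblock]$Contents)

    # Invoking the block runs each Field call; their output is collected here.
    $controls = @(& $Contents)

    # Build one property per emitted item, preserving declaration order.
    $output = [ordered]@{}
    foreach ($item in $controls) {
        $output[$item.Name] = $item.Text
    }
    [PSCustomObject]$output
}

$result = Form {
    Field FirstName 'Ada'
    Field LastName 'Lovelace'
}
$result.FirstName   # Ada
```

The script block reads like a declaration, but it is ordinary PowerShell: each line is a function call, and the container just gathers the pipeline output.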

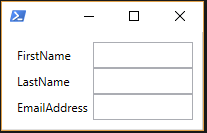

Here’s what the example looks like when you run it:

Not a lot of code for that result.

What’s missing though?

- Only textboxes so far; several other controls are needed
- OK/Cancel buttons
- With other controls, reading the Text property won’t work as a strategy
- Nested controls
- Populating controls
- Events
- ContextMenus
- Setting control properties

Stay tuned for more of this WPF DSL.

–Mike

P.S. It has been pointed out to me that I didn’t explain what a DSL is. For now, I’d point you to the Kevin Marquette articles I linked above. I’ll post tomorrow with a quick “what is a DSL and why”.

N.B. This is just a quick note to relate something I ran into in the last couple of weeks. Not an in-depth discussion of reflection.
